2021.08.19 - [Study/Study group] - [Spark-Study] Day-6

Last time, part of the exercise on p. 55 didn't work, so let's give it another try~

We'll code in spark-shell!

terrypark ~ master

spark-shell

21/08/26 10:19:58 WARN Utils: Your hostname, acetui-MacBookPro.local resolves to a loopback address: 127.0.0.1; using 172.27.114.231 instead (on interface en0)

21/08/26 10:19:58 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/usr/local/Cellar/apache-spark/3.1.2/libexec/jars/spark-unsafe_2.12-3.1.2.jar) to constructor java.nio.DirectByteBuffer(long,int)

WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

21/08/26 10:19:58 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://172.27.114.231:4040

Spark context available as 'sc' (master = local[*], app id = local-1629940803068).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.1.2

/_/

Using Scala version 2.12.10 (OpenJDK 64-Bit Server VM, Java 11.0.10)

Type in expressions to have them evaluated.

Type :help for more information.

scala> import org.apache.spark.sql.functions._

import org.apache.spark.sql.functions._

Since this is a new Spark session, values such as jsonFile have to be defined again.

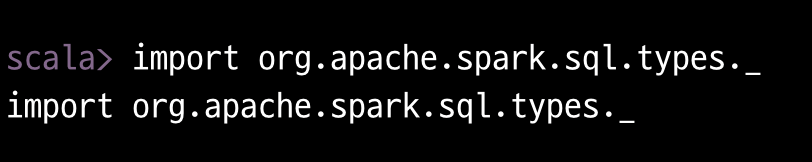
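A minimal sketch of what re-creating the session state can look like. The screenshot's actual code is not recoverable here, so the schema and sample records below are assumptions modeled on a blogs-style dataset, and the JSON is supplied as in-memory strings so the snippet runs without a file. In the real exercise, `jsonFile` would be a path passed to `.json(jsonFile)` instead.

```scala
import org.apache.spark.sql.SparkSession

// In spark-shell this simply returns the session that already exists.
val spark = SparkSession.builder().master("local[*]").appName("json-reload-sketch").getOrCreate()
import spark.implicits._

// Assumed schema, written as a DDL string (hypothetical column set).
val schema = "Id INT, First STRING, Last STRING, Url STRING, Published STRING, Hits INT, Campaigns ARRAY<STRING>"

// Hypothetical sample records standing in for the contents of the JSON file.
val jsonLines = Seq(
  """{"Id":1, "First":"Jules", "Last":"Damji", "Url":"https://tinyurl.1", "Published":"1/4/2016", "Hits":4535, "Campaigns":["twitter","LinkedIn"]}""",
  """{"Id":2, "First":"Brooke", "Last":"Wenig", "Url":"https://tinyurl.2", "Published":"5/5/2018", "Hits":8908, "Campaigns":["twitter","LinkedIn"]}"""
).toDS()

// Apply the schema while reading, so Spark does not have to infer it.
val blogsDF = spark.read.schema(schema).json(jsonLines)
blogsDF.printSchema()
blogsDF.show(false)
```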

Import libraries as needed.

As shown below, you can fetch data based on a condition on a column.

So far we have talked about schemas and columns and practiced with them.

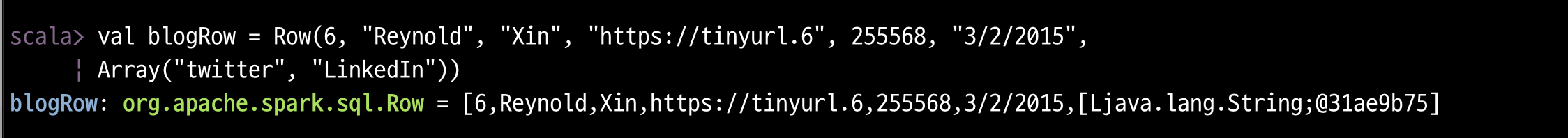

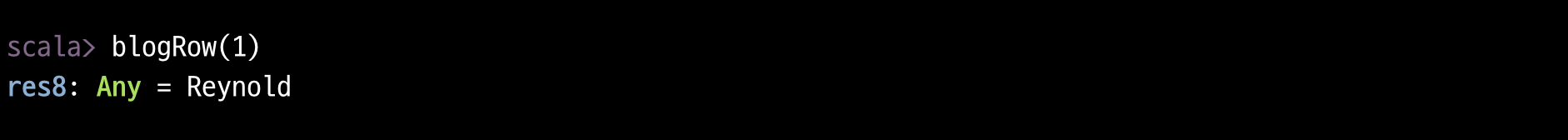
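Since the screenshots cannot be copied, here is a rough sketch of filtering rows by a column condition. The DataFrame, its column names, and the threshold are made-up sample values, not the ones from the screenshots.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.col

// In spark-shell this simply returns the session that already exists.
val spark = SparkSession.builder().master("local[*]").appName("column-filter-sketch").getOrCreate()
import spark.implicits._

// Hypothetical sample data; the real exercise loads a JSON file instead.
val blogsDF = Seq(
  (1, "Jules", 4535),
  (2, "Brooke", 8908),
  (3, "Denny", 7659),
  (4, "Tathagata", 10568)
).toDF("Id", "First", "Hits")

// Keep only the rows whose Hits column exceeds 8000.
val popularDF = blogsDF.where(col("Hits") > 8000)
popularDF.show()

// Add a Boolean column computed from the same condition.
val flaggedDF = blogsDF.withColumn("BigHitter", col("Hits") > 8000)
flaggedDF.show()
```

`where` and `filter` are interchangeable here; the condition is an ordinary `Column` expression, so it can also be reused in `withColumn` as above.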

Now let's look at Row objects.

Let's create some rows and bundle them into a DataFrame with the columns Author and State.

Let's take a look at DataFrames (all of a sudden?)

Let's try using DataFrameReader and DataFrameWriter:
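A small sketch of that step, assuming two illustrative author entries (the names and states are sample values, not taken from this post). It first shows index-based access on a single `Row`, then builds the Author/State DataFrame from a sequence of tuples.

```scala
import org.apache.spark.sql.{Row, SparkSession}

// In spark-shell this simply returns the session that already exists.
val spark = SparkSession.builder().master("local[*]").appName("row-sketch").getOrCreate()
import spark.implicits._

// A Row is an ordered collection of fields, accessed by index starting at 0.
val authorRow = Row("Matei Zaharia", "CA")
println(authorRow(0))            // first field, returned as Any
println(authorRow.getString(1))  // second field, returned as String

// A sequence of tuples can be turned into a DataFrame with named columns.
val rows = Seq(("Matei Zaharia", "CA"), ("Reynold Xin", "CA"))
val authorsDF = rows.toDF("Author", "State")
authorsDF.show()
```

`toDF` on a sequence of tuples is the quick route for small hand-written data; for anything larger, reading from a file is the more usual approach.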

/Users/terrypark/Joy/LearningSparkV2/chapter3/data/sf-fire-calls.csv

val sampleDF = spark.read.option("samplingRatio", 0.001).option("header", true).csv("""/Users/terrypark/Joy/LearningSparkV2/chapter3/data/sf-fire-calls.csv""")

Create fireSchema.

As code rather than a screenshot, so it can be copied! lol
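The schema definition itself is not shown in this post, so the following is only a sketch of what `fireSchema` might look like. The field names and types are an assumption based on the San Francisco fire calls example this exercise follows; they are at least consistent with the 28 fields that spark-shell reports for `fireDF` (`CallNumber: int, UnitID: string ... 26 more fields`).

```scala
import org.apache.spark.sql.types._

// Assumed schema for sf-fire-calls.csv (28 fields); adjust it if your CSV differs.
val fireSchema = StructType(Array(
  StructField("CallNumber", IntegerType, true),
  StructField("UnitID", StringType, true),
  StructField("IncidentNumber", IntegerType, true),
  StructField("CallType", StringType, true),
  StructField("CallDate", StringType, true),
  StructField("WatchDate", StringType, true),
  StructField("CallFinalDisposition", StringType, true),
  StructField("AvailableDtTm", StringType, true),
  StructField("Address", StringType, true),
  StructField("City", StringType, true),
  StructField("Zipcode", IntegerType, true),
  StructField("Battalion", StringType, true),
  StructField("StationArea", StringType, true),
  StructField("Box", StringType, true),
  StructField("OriginalPriority", StringType, true),
  StructField("Priority", StringType, true),
  StructField("FinalPriority", IntegerType, true),
  StructField("ALSUnit", BooleanType, true),
  StructField("CallTypeGroup", StringType, true),
  StructField("NumAlarms", IntegerType, true),
  StructField("UnitType", StringType, true),
  StructField("UnitSequenceInCallDispatch", IntegerType, true),
  StructField("FirePreventionDistrict", StringType, true),
  StructField("SupervisorDistrict", StringType, true),
  StructField("Neighborhood", StringType, true),
  StructField("Location", StringType, true),
  StructField("RowID", StringType, true),
  StructField("Delay", FloatType, true)
))
```

Passing an explicit schema like this to `spark.read.schema(...)` avoids a pass over the data for type inference, which matters for a file of this size.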

scala> val sf_fire_file ="/Users/terrypark/Joy/LearningSparkV2/chapter3/data/sf-fire-calls.csv"

sf_fire_file: String = /Users/terrypark/Joy/LearningSparkV2/chapter3/data/sf-fire-calls.csv

scala> val fireDF = spark.read.schema(fireSchema).option("header", "true").csv(sf_fire_file)

fireDF: org.apache.spark.sql.DataFrame = [CallNumber: int, UnitID: string ... 26 more fields]

Specify the path where the Parquet file will be saved.

scala> val parquetPath = "/Users/terrypark/Joy/LearningSparkV2/acet"

parquetPath: String = /Users/terrypark/Joy/LearningSparkV2/acet

scala> fireDF.write.format("parquet").save(parquetPath)

21/08/26 11:08:04 WARN package: Truncated the string representation of a plan since it was too large. This behavior can be adjusted by setting 'spark.sql.debug.maxToStringFields'.

21/08/26 11:08:05 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 95.00% for 8 writers

21/08/26 11:08:05 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 84.44% for 9 writers

21/08/26 11:08:05 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 76.00% for 10 writers

21/08/26 11:08:05 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 69.09% for 11 writers

21/08/26 11:08:06 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 76.00% for 10 writers

21/08/26 11:08:06 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 84.44% for 9 writers

21/08/26 11:08:06 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 95.00% for 8 writers

If you go to that path, you can confirm that the files have been saved there.

Specify a table name and save the DataFrame as a table under that name.
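Besides looking at the directory, the saved data can also be checked by reading it back. The sketch below uses a tiny stand-in DataFrame and a temporary directory (both hypothetical, so it does not touch the real output path) to show the round trip, plus the `overwrite` save mode, since the default mode fails when the target path already exists.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// In spark-shell this simply returns the session that already exists.
val spark = SparkSession.builder().master("local[*]").appName("parquet-roundtrip-sketch").getOrCreate()
import spark.implicits._

// Hypothetical stand-in for fireDF with just two columns.
val callsDF = Seq(
  (2003235, "Structure Fire"),
  (2003250, "Vehicle Fire")
).toDF("IncidentNumber", "CallType")

// Temporary output location, used here instead of the real parquetPath.
val outPath = Files.createTempDirectory("parquet-sketch").resolve("calls").toString

// mode("overwrite") replaces existing output; the default mode raises an error
// if the path already exists, which is what happens when the save is re-run.
callsDF.write.mode("overwrite").format("parquet").save(outPath)

// Parquet files carry their schema, so none has to be supplied when reading.
val restoredDF = spark.read.parquet(outPath)
restoredDF.printSchema()
restoredDF.show()
```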

scala> val parquetTable ="aceTable"

parquetTable: String = aceTable

scala> fireDF.write.format("parquet").saveAsTable(parquetTable)

21/08/26 11:10:52 WARN HiveConf: HiveConf of name hive.stats.jdbc.timeout does not exist

21/08/26 11:10:52 WARN HiveConf: HiveConf of name hive.stats.retries.wait does not exist

21/08/26 11:10:56 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 2.3.0

21/08/26 11:10:56 WARN ObjectStore: setMetaStoreSchemaVersion called but recording version is disabled: version = 2.3.0, comment = Set by MetaStore terrypark@127.0.0.1

21/08/26 11:10:56 WARN ObjectStore: Failed to get database default, returning NoSuchObjectException

21/08/26 11:10:57 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 95.00% for 8 writers

21/08/26 11:10:57 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 84.44% for 9 writers

21/08/26 11:10:57 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 76.00% for 10 writers

21/08/26 11:10:57 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 69.09% for 11 writers

21/08/26 11:10:57 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 76.00% for 10 writers

21/08/26 11:10:57 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 84.44% for 9 writers

21/08/26 11:10:57 WARN MemoryManager: Total allocation exceeds 95.00% (1,020,054,720 bytes) of heap memory

Scaling row group sizes to 95.00% for 8 writers

21/08/26 11:10:58 WARN SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

21/08/26 11:10:58 WARN HiveConf: HiveConf of name hive.internal.ss.authz.settings.applied.marker does not exist

21/08/26 11:10:58 WARN HiveConf: HiveConf of name hive.stats.jdbc.timeout does not exist

21/08/26 11:10:58 WARN HiveConf: HiveConf of name hive.stats.retries.wait does not exist

scala> val fewFireDF = fireDF.select("IncidentNumber", "AvailableDtTm", "CallType").where($"CallType" =!= "Medical Incident")

fewFireDF: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [IncidentNumber: int, AvailableDtTm: string ... 1 more field]

scala> fewFireDF.show(5, false)

+--------------+----------------------+--------------+

|IncidentNumber|AvailableDtTm |CallType |

+--------------+----------------------+--------------+

|2003235 |01/11/2002 01:51:44 AM|Structure Fire|

|2003250 |01/11/2002 04:16:46 AM|Vehicle Fire |

|2003259 |01/11/2002 06:01:58 AM|Alarms |

|2003279 |01/11/2002 08:03:26 AM|Structure Fire|

|2003301 |01/11/2002 09:46:44 AM|Alarms |

+--------------+----------------------+--------------+

only showing top 5 rows

Done through p. 61.

Next time, we start from p. 62!