
2021.06.14 - [Study/Study group] - [Spark-Study] Day-1

2021.06.24 - [Study/Study group] - [Spark-Study] Day-2

2021.07.01 - [BigDATA/spark] - [Spark-Study] Day-3

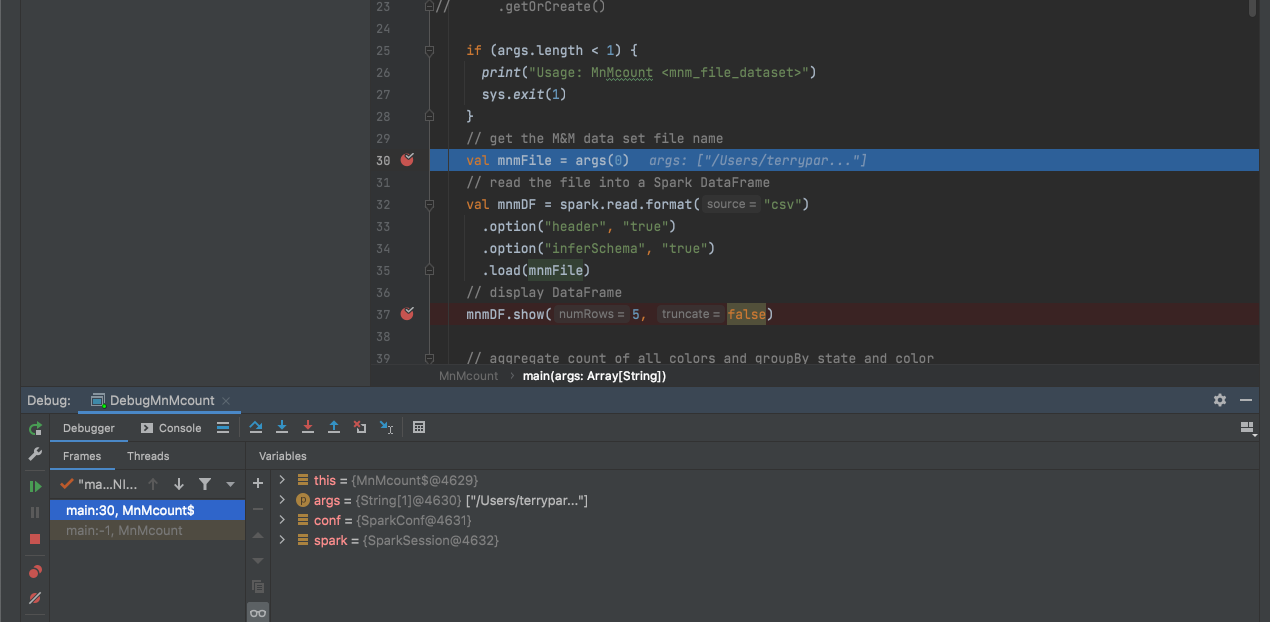

Trying out Spark local debugging

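In the code, the following is added:

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

// Use local mode only when no master was supplied elsewhere (e.g. by spark-submit)
val conf = new SparkConf().setAppName("MnMCount")
conf.setIfMissing("spark.master", "local[*]")

val spark = SparkSession
  .builder
  .config(conf)
  .getOrCreate()

// Previous version, which relied on the master being set externally:
// val spark = SparkSession
//   .builder
//   .appName("MnMCount")
//   .getOrCreate()
```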
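For reference, a minimal self-contained entry point that can be debugged locally might look like the sketch below. It rests on assumptions: the object name `MnMCountDebug`, the helpers `buildSession` and `countByColor`, and the three in-memory rows are hypothetical stand-ins for the M&M CSV the real app reads. Because of `setIfMissing`, launching it straight from the IDE runs in `local[*]`, while a `--master` passed to `spark-submit` still takes precedence.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

// Hypothetical debugging sketch; the real MnMCount app reads a CSV file instead.
object MnMCountDebug {
  def buildSession(): SparkSession = {
    val conf = new SparkConf().setAppName("MnMCount")
    // Only applies when spark.master was not already set (e.g. by spark-submit --master)
    conf.setIfMissing("spark.master", "local[*]")
    SparkSession.builder.config(conf).getOrCreate()
  }

  def countByColor(spark: SparkSession): Map[String, Long] = {
    import spark.implicits._
    // Hypothetical in-memory sample standing in for the M&M dataset rows
    val mnm = Seq(("TX", "Red", 20), ("CA", "Blue", 10), ("TX", "Red", 5))
      .toDF("State", "Color", "Count")
    // A convenient line for a breakpoint: inspect `mnm` in the debugger
    mnm.groupBy("Color").count()
      .collect()
      .map(r => r.getString(0) -> r.getLong(1))
      .toMap
  }

  def main(args: Array[String]): Unit = {
    val spark = buildSession()
    try println(countByColor(spark))
    finally spark.stop()
  }
}
```

Setting a breakpoint inside `countByColor` and starting the Debug configuration should stop there with the DataFrame in scope.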

To run it, add an Application run configuration for debugging in IntelliJ.

Then press the bug-shaped (Debug) icon.

As shown below, execution stops at the breakpoint.

Reference: https://supergloo.com/spark-scala/debug-scala-spark-intellij/

- Local Spark Debugging

- Remote Spark Debugging

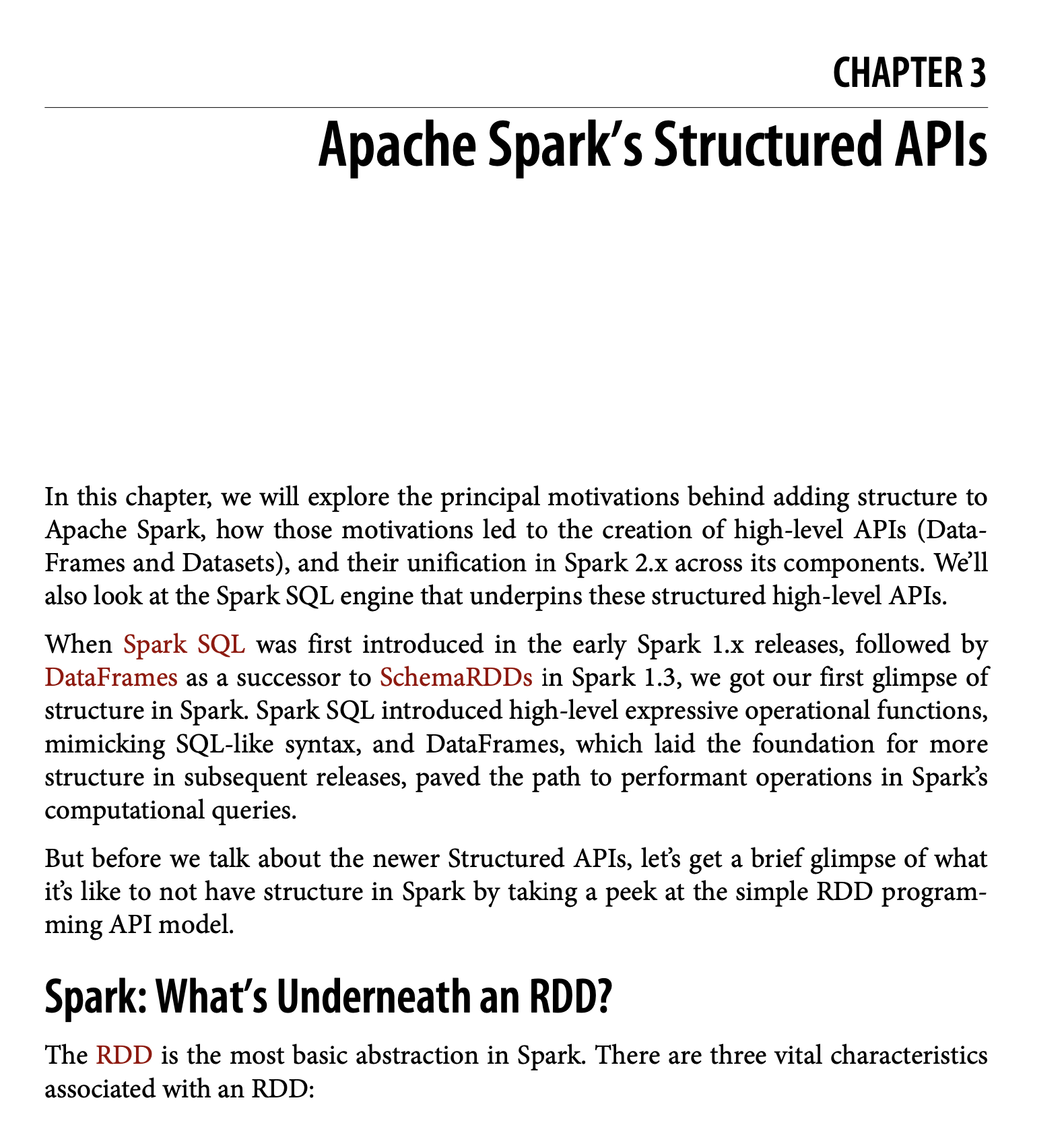

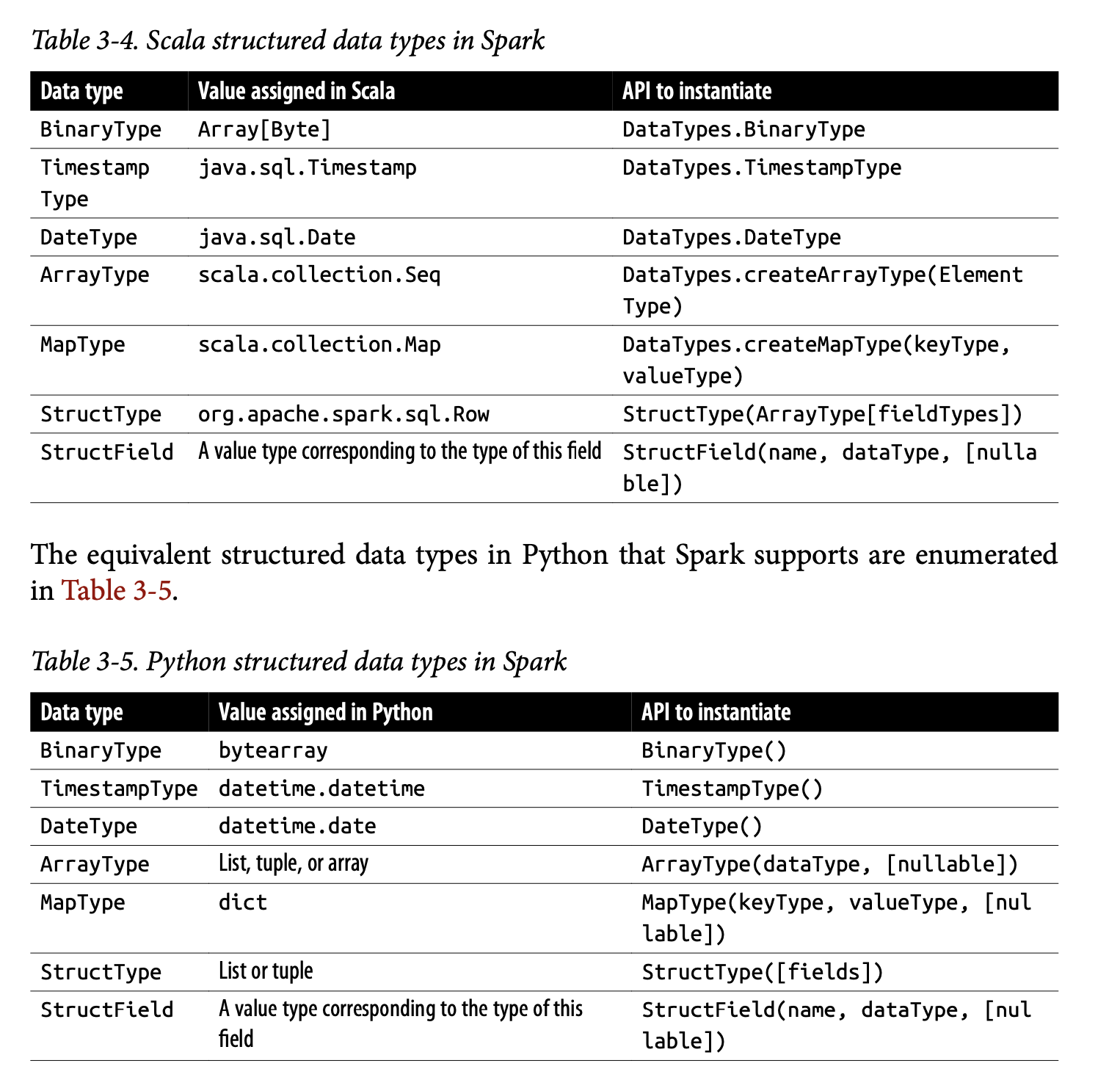

Chapter 3 study (reading and discussion)

Spark basic data types

```
$ cd /usr/local/Cellar/apache-spark/3.1.2
$ ll
total 192
-rw-r--r-- 1 terrypark admin 1.3K 7 29 13:45 INSTALL_RECEIPT.json
-rw-r--r-- 1 terrypark admin 23K 5 24 13:45 LICENSE
-rw-r--r-- 1 terrypark admin 56K 5 24 13:45 NOTICE
-rw-r--r-- 1 terrypark admin 4.4K 5 24 13:45 README.md
drwxr-xr-x 13 terrypark admin 416B 7 29 13:45 bin
drwxr-xr-x 14 terrypark admin 448B 5 24 13:45 libexec
$ ./bin/spark-shell
21/08/05 10:38:03 WARN Utils: Your hostname, acetui-MacBookPro.local resolves to a loopback address: 127.0.0.1; using ip instead (on interface en0)
21/08/05 10:38:03 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
WARNING: An illegal reflective access operation has occurred
WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/usr/local/Cellar/apache-spark/3.1.2/libexec/jars/spark-unsafe_2.12-3.1.2.jar) to constructor java.nio.DirectByteBuffer(long,int)
WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform
WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations
WARNING: All illegal access operations will be denied in a future release
21/08/05 10:38:03 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
21/08/05 10:38:08 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.
Spark context Web UI available at http://ip:4041
Spark context available as 'sc' (master = local[*], app id = local-1628127488293).
Spark session available as 'spark'.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.1.2
      /_/

Using Scala version 2.12.10 (OpenJDK 64-Bit Server VM, Java 11.0.10)
Type in expressions to have them evaluated.
Type :help for more information.
```

```
scala> import org.apache.spark.sql.types._
import org.apache.spark.sql.types._

scala> val nameTypes = StringType
nameTypes: org.apache.spark.sql.types.StringType.type = StringType

scala> val firstName = nameTypes
firstName: org.apache.spark.sql.types.StringType.type = StringType

scala> val lastName = nameTypes
lastName: org.apache.spark.sql.types.StringType.type = StringType
```

See the table below for the data types.

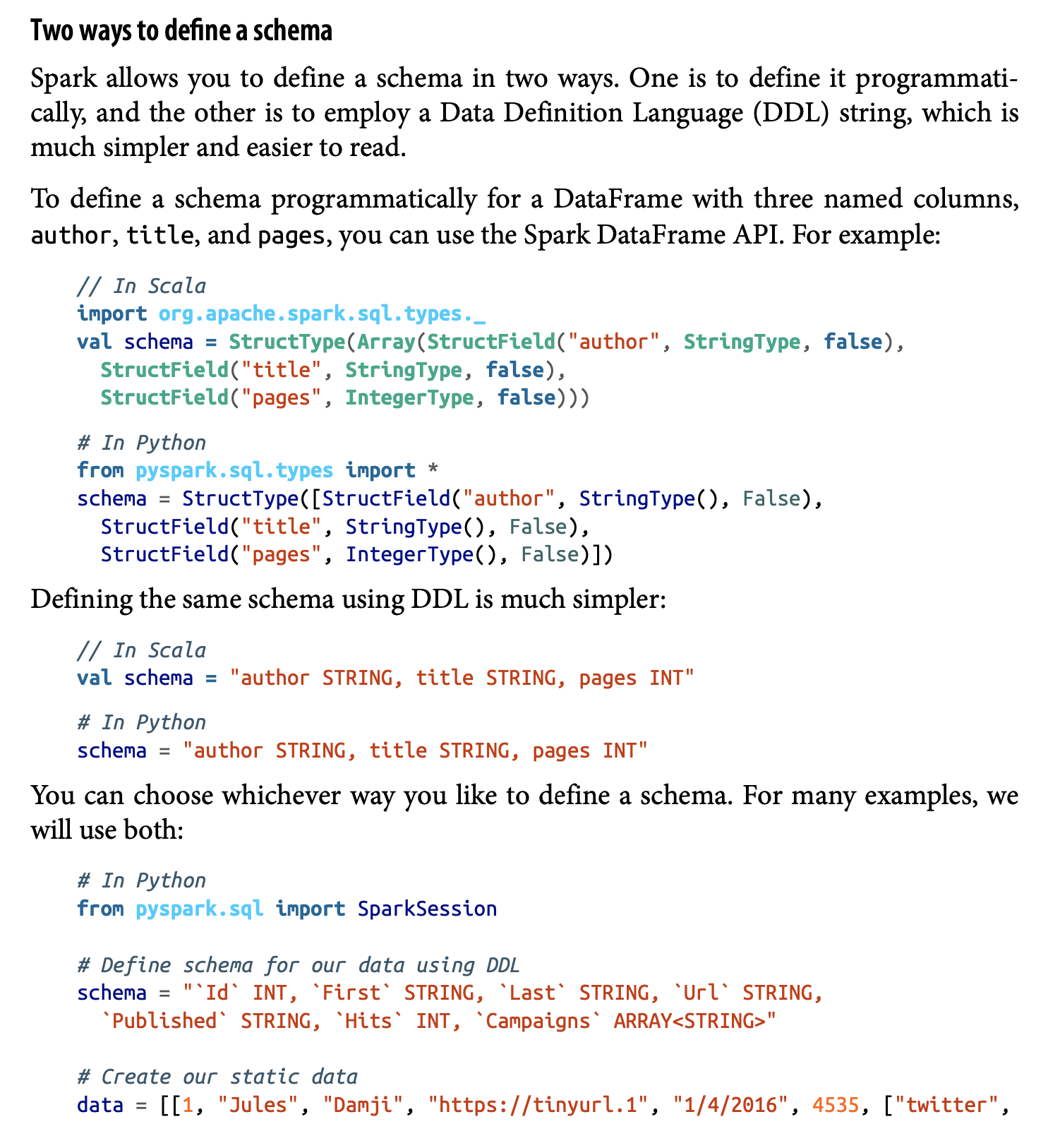
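To see how plain Scala values map to the Spark types in the table, a small sketch like the following builds a one-row DataFrame and reads back the inferred column types. The object name `BasicTypesDemo`, its helpers, and the column names are hypothetical; the mapping itself (`String` to `StringType`, `Int` to `IntegerType`, `Double` to `DoubleType`, `Boolean` to `BooleanType`) comes from Spark's encoders.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.types.DataType

// Hypothetical demo object: let Spark infer column types from Scala values
object BasicTypesDemo {
  // One row of plain Scala values; Spark derives each column's DataType from them
  def sample(spark: SparkSession): DataFrame = {
    import spark.implicits._
    Seq(("Jules", 30, 4.5, true)).toDF("name", "age", "rating", "active")
  }

  // (column name, Spark data type) pairs taken from the DataFrame's schema
  def columnTypes(df: DataFrame): Seq[(String, DataType)] =
    df.schema.fields.toSeq.map(f => (f.name, f.dataType))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder.master("local[*]").appName("BasicTypesDemo").getOrCreate()
    try sample(spark).printSchema()
    finally spark.stop()
  }
}
```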

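Because specifying types column by column like this quickly gets complicated, Spark lets you describe them all at once with a schema.

There are two ways to define a schema: programmatically (with `StructType` and `StructField`) or with a DDL (Data Definition Language) string.

Related GitHub: https://github.com/databricks/LearningSparkV2

Next study: write and run the Chapter 3 code.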
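Both approaches can be sketched side by side, assuming a hypothetical book-style schema with `author`, `title`, and `pages` columns (the object name `SchemaTwoWays` is also hypothetical). `StructType.fromDDL` parses the DDL string into the same kind of `StructType` you would build by hand; columns parsed from DDL come out nullable, so only the names and types line up with the hand-built version here.

```scala
import org.apache.spark.sql.types._

object SchemaTwoWays {
  // Way 1: define the schema programmatically with StructType / StructField
  val programmaticSchema: StructType = StructType(Array(
    StructField("author", StringType, nullable = false),
    StructField("title", StringType, nullable = false),
    StructField("pages", IntegerType, nullable = false)))

  // Way 2: describe the same columns with a DDL string
  val ddlSchema: String = "author STRING, title STRING, pages INT"

  // Parse the DDL string into a StructType (DDL columns default to nullable = true)
  val parsedDdlSchema: StructType = StructType.fromDDL(ddlSchema)

  def main(args: Array[String]): Unit = {
    programmaticSchema.printTreeString()
    parsedDdlSchema.printTreeString()
  }
}
```

Either form can then be handed to `spark.read.schema(...)`, which accepts a `StructType` or a DDL `String`; supplying a schema up front spares Spark from inferring types by scanning the data.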
