p31

The Spark UI

Picking up after last session's Spark setup.

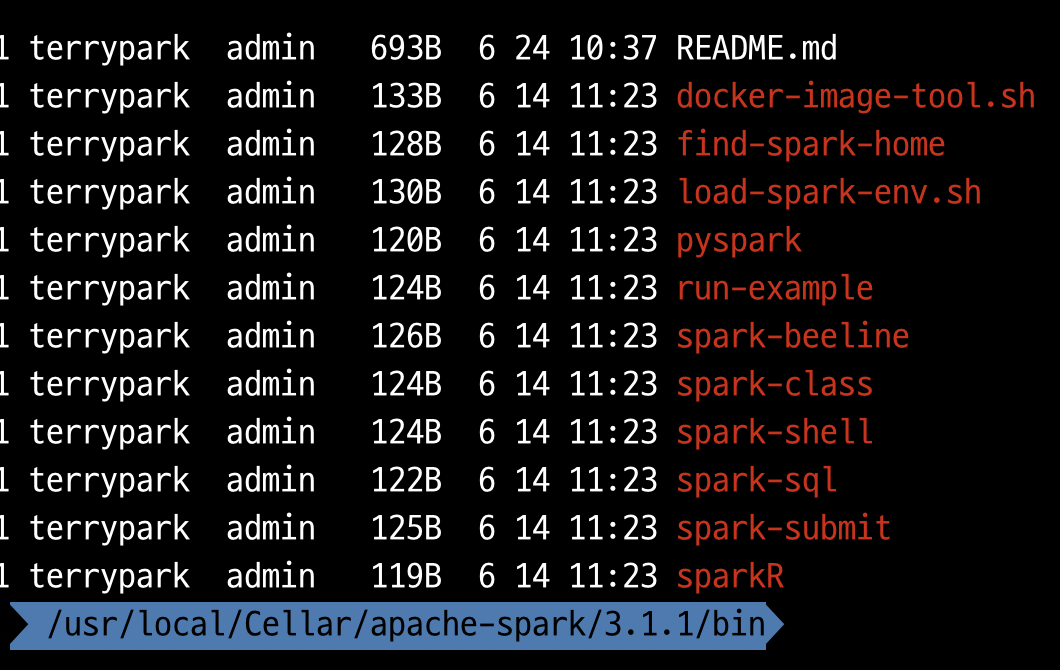

Move into the Spark bin directory with cd /usr/local/Cellar/apache-spark/3.1.1/bin, then start spark-shell.

spark-shell

It greets you like this, lol:

    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    Spark context Web UI available at http://<your IP shows up here>:4040
    Spark context available as 'sc' (master = local[*], app id = local-1624457045234).
    Spark session available as 'spark'.
    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 3.1.1
          /_/

    Using Scala version 2.12.10 (OpenJDK 64-Bit Server VM, Java 11.0.10)
    Type in expressions to have them evaluated.
    Type :help for more information.

    scala>

The output above says a Web UI is available, and that is the Spark UI!

You can look up information about the running application there.

If you want to use Python, run pyspark instead. It provides the Spark UI as well.

Example reference: https://github.com/databricks/LearningSparkV2

Save the contents of README.md from the repository above as a README.md file in the directory you are working in. Its contents:

Learning Spark 2nd Edition

Welcome to the GitHub repo for Learning Spark 2nd Edition.

Chapters 2, 3, 6, and 7 contain stand-alone Spark applications. You can build all the JAR files for each chapter by running the Python script: python build_jars.py. Or you can cd to the chapter directory and build jars as specified in each README. Also, include $SPARK_HOME/bin in $PATH so that you don't have to prefix SPARK_HOME/bin/spark-submit for these standalone applications.

For all the other chapters, we have provided notebooks in the notebooks folder. We have also included notebook equivalents for a few of the stand-alone Spark applications in the aforementioned chapters.

Have Fun, Cheers!

Then run the following!

run-example JavaWordCount README.md
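Conceptually, a word count like this splits each line into space-separated tokens and tallies how often each token occurs. The following is a minimal plain-Python sketch of that idea, not the actual JavaWordCount source. The function name count_words is made up for illustration, and details such as how empty lines are handled may differ from the Spark example.

```python
from collections import Counter


def count_words(text):
    """Tally whitespace-separated tokens; punctuation stays attached to the token."""
    counts = Counter()
    for line in text.splitlines():
        # split() with no argument splits on runs of whitespace and drops empty tokens
        counts.update(line.split())
    return counts


if __name__ == "__main__":
    sample = "Have Fun, Cheers!"  # last line of the README used in this post
    for word, n in count_words(sample).items():
        print(f"{word}: {n}")
```

Because punctuation is not stripped, tokens such as chapters, and chapters. are treated as different words, which is consistent with the output in this post.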

The words get counted as shown below.

    Have: 1
    build: 2
    stand-alone: 2
    chapters,: 1
    of: 1
    chapters.: 1
    prefix: 1
    by: 1
    chapter: 2
    $PATH: 1
    aforementioned: 1
    JAR: 1
    6,: 1
    2,: 1
    so: 1
    Or: 1
    and: 2
    included: 1

Running the Python example

mnmcount.py

    from __future__ import print_function

    import sys

    from pyspark.sql import SparkSession

    if __name__ == "__main__":
        if len(sys.argv) != 2:
            print("Usage: mnmcount <file>", file=sys.stderr)
            sys.exit(-1)

        spark = (SparkSession
            .builder
            .appName("PythonMnMCount")
            .getOrCreate())
        # get the M&M data set file name
        mnm_file = sys.argv[1]
        # read the file into a Spark DataFrame
        mnm_df = (spark.read.format("csv")
            .option("header", "true")
            .option("inferSchema", "true")
            .load(mnm_file))
        mnm_df.show(n=5, truncate=False)

        # aggregate count of all colors and groupBy state and color
        # orderBy descending order
        count_mnm_df = (mnm_df.select("State", "Color", "Count")
            .groupBy("State", "Color")
            .sum("Count")
            .orderBy("sum(Count)", ascending=False))

        # show all the resulting aggregation for all the states and colors
        count_mnm_df.show(n=60, truncate=False)
        print("Total Rows = %d" % (count_mnm_df.count()))

        # find the aggregate count for California by filtering
        ca_count_mnm_df = (mnm_df.select("*")
            .where(mnm_df.State == 'CA')
            .groupBy("State", "Color")
            .sum("Count")
            .orderBy("sum(Count)", ascending=False))

        # show the resulting aggregation for California
        ca_count_mnm_df.show(n=10, truncate=False)

        spark.stop()

mnm_dataset.csv

https://raw.githubusercontent.com/databricks/LearningSparkV2/master/chapter2/py/src/data/mnm_dataset.csv
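To make the aggregation in mnmcount.py more concrete, here is a rough plain-Python sketch of what groupBy("State", "Color").sum("Count") followed by a descending orderBy computes. The rows are made up for illustration and are not taken from mnm_dataset.csv, and the helper name sum_by_state_color is likewise hypothetical. Spark does this work in a distributed way, so this only mirrors the result, not how it is executed.

```python
from collections import defaultdict


def sum_by_state_color(rows):
    """rows: iterable of (state, color, count) tuples.

    Returns a list of (state, color, total) sorted by total, largest first.
    """
    totals = defaultdict(int)
    for state, color, count in rows:
        totals[(state, color)] += count
    return sorted(
        ((state, color, total) for (state, color), total in totals.items()),
        key=lambda row: row[2],
        reverse=True,
    )


if __name__ == "__main__":
    # Hypothetical rows, not real data from the M&M data set
    rows = [
        ("CA", "Red", 10),
        ("CA", "Red", 5),
        ("TX", "Blue", 7),
        ("CA", "Blue", 20),
    ]
    for state, color, total in sum_by_state_color(rows):
        print(state, color, total)
```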

Run the command:

spark-submit mnmcount.py mnm_dataset.csv

    21/06/24 10:49:35 INFO TaskSetManager: Finished task 199.0 in stage 9.0 (TID 606) in 12 ms on 172.27.114.231 (executor driver) (200/200)
    21/06/24 10:49:35 INFO TaskSchedulerImpl: Removed TaskSet 9.0, whose tasks have all completed, from pool
    21/06/24 10:49:35 INFO DAGScheduler: ResultStage 9 (showString at NativeMethodAccessorImpl.java:0) finished in 0.561 s
    21/06/24 10:49:35 INFO DAGScheduler: Job 5 is finished. Cancelling potential speculative or zombie tasks for this job
    21/06/24 10:49:35 INFO TaskSchedulerImpl: Killing all running tasks in stage 9: Stage finished
    21/06/24 10:49:35 INFO DAGScheduler: Job 5 finished: showString at NativeMethodAccessorImpl.java:0, took 0.772106 s
    +-----+------+----------+
    |State|Color |sum(Count)|
    +-----+------+----------+
    |CA   |Yellow|100956    |
    |CA   |Brown |95762     |
    |CA   |Green |93505     |
    |CA   |Red   |91527     |
    |CA   |Orange|90311     |
    |CA   |Blue  |89123     |
    +-----+------+----------+

Now let's try it in Scala!

But..!

Unlike Python, it has to be built first!

brew install sbt

/usr/local/Cellar/sbt/1.5.2

build.sbt

    // name of the package
    name := "main/scala/chapter2"
    // version of our package
    version := "1.0"
    // version of Scala
    scalaVersion := "2.12.10"
    // spark library dependencies
    // change this to 3.0.0 when released
    libraryDependencies ++= Seq(
      "org.apache.spark" %% "spark-core" % "3.0.0-preview2",
      "org.apache.spark" %% "spark-sql"  % "3.0.0-preview2"
    )

/usr/local/Cellar/apache-spark/3.1.1/bin/target/scala-2.12/main-scala-chapter2_2.12-1.0.jar

    spark-submit --class main.scala.chapter2.MnMcount \
      /usr/local/Cellar/apache-spark/3.1.1/bin/target/scala-2.12/main-scala-chapter2_2.12-1.0.jar mnm_dataset.csv

An error occurred. The package doesn't seem to match!

Error: Failed to load class main.scala.chapter2.MnMcount.
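This error generally means spark-submit could not find a class with that fully qualified name in the JAR, for example because the package declaration in the source file differs from the name passed to --class, or because the source was not compiled into the JAR at all. Since a JAR is a zip archive, one way to check is to look for the matching .class entry. A minimal sketch, assuming the usual layout where package main.scala.chapter2 maps to the directory main/scala/chapter2/ inside the JAR; the helper name jar_has_class is made up here.

```python
import zipfile


def jar_has_class(jar_path, class_name):
    """Return True if the JAR has a .class entry for the fully qualified class name."""
    # e.g. main.scala.chapter2.MnMcount -> main/scala/chapter2/MnMcount.class
    entry = class_name.replace(".", "/") + ".class"
    with zipfile.ZipFile(jar_path) as jar:
        return entry in jar.namelist()
```

Calling jar_has_class with the path to the built JAR and "main.scala.chapter2.MnMcount" and getting False back would point to the package declaration or the build layout as the likely cause.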

Next study session: next Thursday!

Run the Scala version on p. 39

Try setting it up to run in IntelliJ
